Load balancers act as a triage point for internet traffic. Equipped with practical security features, they are the gatekeepers that direct web traffic to the best available servers so applications run efficiently. They can distribute requests using different algorithms, each with its own strengths, and in this post you will read about them so you can make the most of our load balancers.

Round Robin

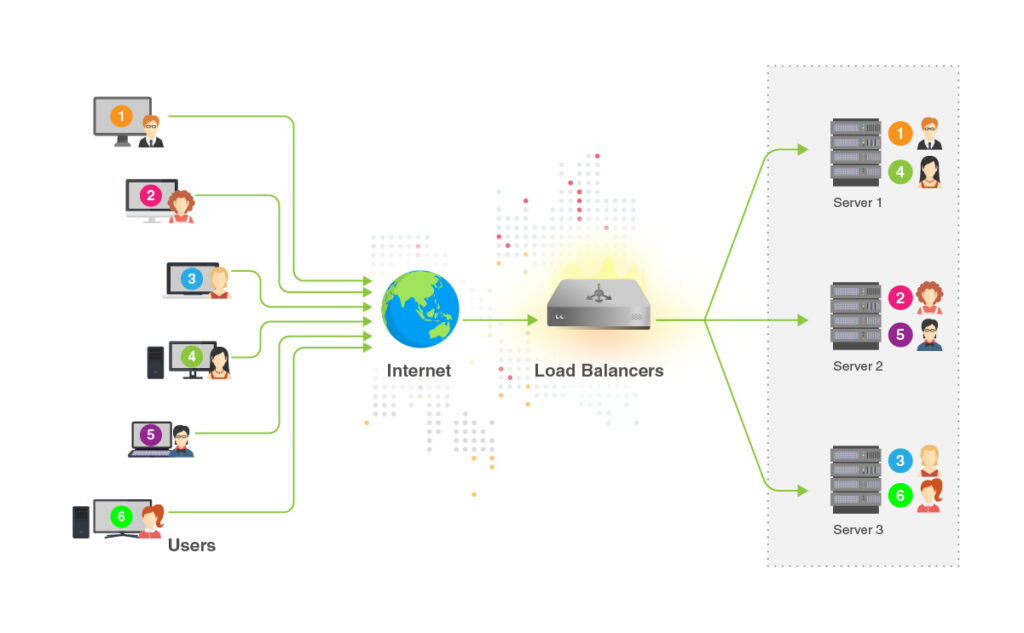

This is the most common algorithm: all available servers form a queue. When a new request comes in, the load balancer forwards it to the first server in the queue, and it sends the next request to the next server in the list.

The diagram above shows how this works. Say we have an environment with three available servers. The first client's request (1) received by the balancer is assigned to server 1. The next request (2) is assigned to the next server in turn, server 2. Once the balancer has routed the third request and reached the bottom of the server list, it directs the next client (4) back to the first server on the list, server 1, and the cycle continues.

It is the simplest algorithm to implement. When requests are roughly similar in cost, each server handles a similar share of the workload, so no server is overloaded while others sit idle.
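
The rotation can be sketched in a few lines of Python. This is a minimal illustration of the selection step only, assuming a fixed list of healthy servers; `RoundRobinBalancer` and `next_server` are hypothetical names, not part of LayerStack's product or any library, and a production balancer would also need health checks and thread safety.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Round-robin sketch; class and method names are hypothetical."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("need at least one server")
        # cycle() repeats the server list forever, in order.
        self._servers = cycle(servers)

    def next_server(self):
        # Each call returns the next server in the rotation.
        return next(self._servers)


balancer = RoundRobinBalancer(["server 1", "server 2", "server 3"])
for client in range(1, 5):
    print(f"client {client} -> {balancer.next_server()}")
```

Running it sends clients 1 to 3 to servers 1 to 3 and client 4 back to server 1, matching the walkthrough above.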

Least Connections

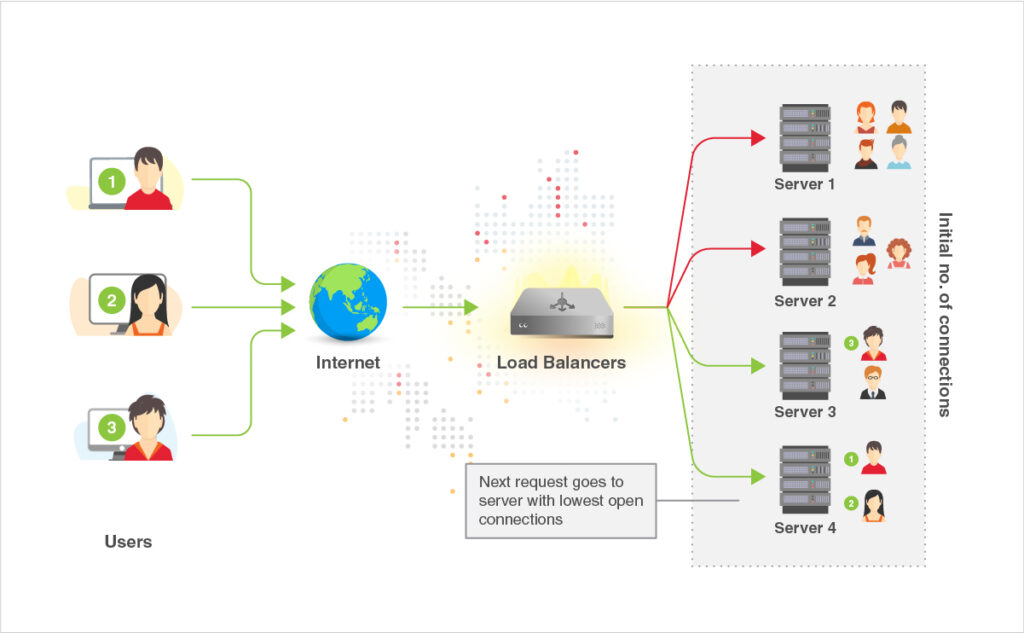

The name says it all: the balancer tracks how many active connections each available server has and assigns each new request to the server with the fewest.

In the diagram above, servers 1 and 2 are already handling more requests than the others. When client 1 comes in, its request is directed to server 4, which is currently idle. The next client (2) is also assigned to server 4, which now ties with server 3 for the fewest connections. With server 4 now holding two connections and server 3 just one, the third incoming request is routed to server 3, the server with the fewest active connections.

Because it takes each server's current load into account, this mechanism spreads work more evenly than a fixed rotation when sessions vary in length, and it copes better with heavy traffic and demanding sessions.
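
Here is a minimal Python sketch of least-connections selection. `LeastConnectionsBalancer`, `assign` and `release` are hypothetical names chosen for illustration, and the starting connection counts in the usage lines are assumed, since the diagram does not give exact numbers. The text does not say how ties are broken; this sketch picks the first server in listing order, so it sends client 2 to server 3 rather than server 4. Both are valid least-connections choices, and the final connection counts come out the same.

```python
class LeastConnectionsBalancer:
    """Least-connections sketch; class and method names are hypothetical."""

    def __init__(self, active_connections):
        # Map of server name -> number of currently open connections.
        self.active = dict(active_connections)

    def assign(self):
        # min() returns the first minimal entry it meets, so ties are
        # broken by the order in which the servers were listed.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a connection closes so the counts stay accurate.
        if self.active[server] > 0:
            self.active[server] -= 1


# Assumed starting load: servers 1 and 2 are busy, server 3 has one
# connection and server 4 is idle.
balancer = LeastConnectionsBalancer(
    {"server 1": 3, "server 2": 3, "server 3": 1, "server 4": 0}
)
for client in range(1, 4):
    print(f"client {client} -> {balancer.assign()}")
```

The `release` method matters as much as `assign`: without it, counts only grow and the balancer stops reflecting real load.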

Source-based

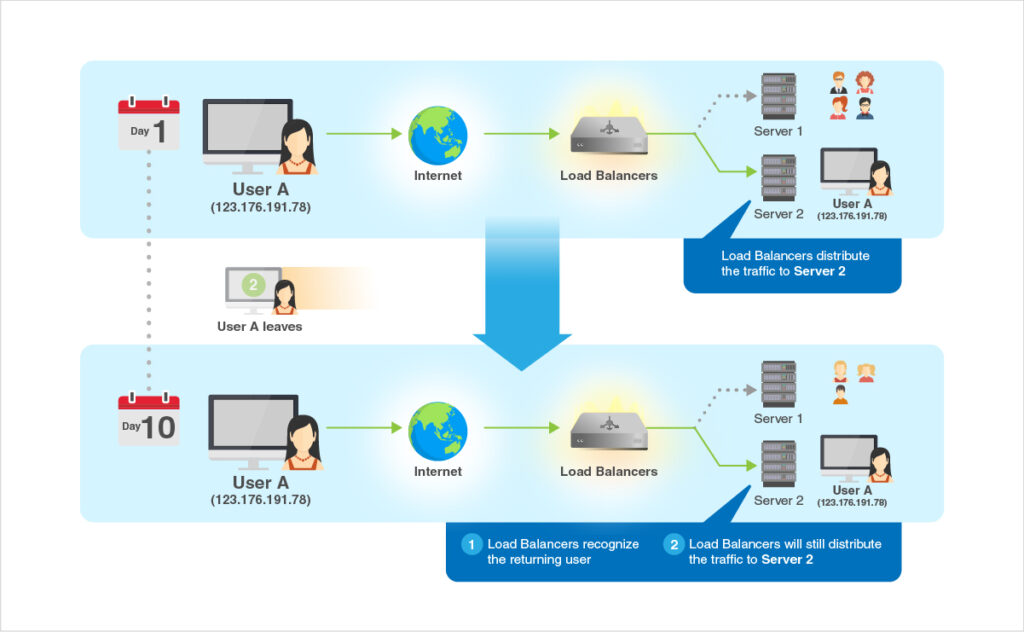

The source-based algorithm pairs requests with servers according to the client's IP address. Once you set up the rules in the LayerPanel, our load balancers route the workloads accordingly.

In the diagram above, for instance, the balancer recognizes an IP address you have previously specified and directs requests from that client to a specific server, server 2. When the same client returns a few days later with a new request, the balancer recognizes its IP address and routes the request to the same server.

This algorithm gives you the flexibility to group certain application-specific tasks together, or to tailor part of the environment to process specific requests. Your application can then handle those requests with the resources best suited to them and produce more predictable results.
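
A minimal Python sketch of rule-based source routing follows. The post does not describe the format of LayerPanel rules, so this sketch assumes each rule maps a CIDR range to a server name, with the first matching rule winning; it also assumes that clients matching no rule fall back to round robin, which the post does not specify. `SourceBasedBalancer` and `route` are hypothetical names, not LayerStack's API, and the IP addresses come from the documentation-only ranges reserved in RFC 5737.

```python
import ipaddress
from itertools import cycle


class SourceBasedBalancer:
    """Source-based routing sketch; names and rule format are hypothetical.

    A client whose IP matches a configured rule always goes to that rule's
    server. Unmatched clients fall back to round robin (an assumption).
    """

    def __init__(self, rules, servers):
        # rules: list of (CIDR string, server name); first match wins.
        self.rules = [(ipaddress.ip_network(cidr), server) for cidr, server in rules]
        self._fallback = cycle(servers)

    def route(self, client_ip):
        addr = ipaddress.ip_address(client_ip)
        for network, server in self.rules:
            if addr in network:
                return server
        return next(self._fallback)


balancer = SourceBasedBalancer(
    rules=[("203.0.113.7/32", "server 2")],
    servers=["server 1", "server 2", "server 3"],
)
print(balancer.route("203.0.113.7"))    # server 2 (first visit)
print(balancer.route("203.0.113.7"))    # server 2 (same client returns later)
print(balancer.route("198.51.100.20"))  # server 1 (no rule, round-robin fallback)
```

Because the mapping depends only on the client's address, the same client lands on the same server no matter how much time passes between requests, as long as the rule stays in place.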

Want more details on how to configure Load Balancers for LayerStack’s cloud servers? Read our tutorials and product docs.


If you have any ideas for improving our products or want to vote on other ideas so they get prioritized, please submit your feedback on our Community platform. Feel free to pop by our community.