As artificial intelligence (AI) workloads grow in size and spread across industries, the need for robust computing hardware has never been greater. The NVIDIA A40, a data center GPU built on the Ampere architecture, targets that demand by combining AI acceleration with professional graphics and visualization.

Unveiling the NVIDIA A40

As AI continues to permeate diverse industries, from healthcare and finance to manufacturing and entertainment, demand for accelerated computing keeps rising. The NVIDIA A40 answers it by pairing faster general-purpose compute with dedicated ray-tracing and AI hardware.

NVIDIA Ampere Architecture

At the heart of the A40 lies the NVIDIA Ampere architecture. Its CUDA Cores deliver up to double the single-precision floating-point (FP32) throughput of the previous generation, which translates to significant performance improvements for graphics and simulation workflows such as complex 3D computer-aided design (CAD) and computer-aided engineering (CAE).

The second-generation RT Cores boast up to 2X the throughput over the previous generation, enabling concurrent ray tracing with shading or denoising capabilities. This breakthrough technology accelerates workloads like photorealistic rendering of movie content, architectural design evaluations, and virtual prototyping of product designs.

The A40’s third-generation Tensor Cores support the new Tensor Float 32 (TF32) precision, delivering up to 5X the training throughput of the previous generation. TF32 speeds up AI and data science model training without requiring any code changes.
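To make the TF32 format more concrete: TF32 keeps the 8-bit exponent of FP32 but only 10 of its 23 mantissa bits. The following is a minimal sketch that emulates this reduced precision in plain Python. The function name `to_tf32` is hypothetical, and simple truncation is an assumption made for clarity; actual hardware rounding may differ.

```python
import struct

def to_tf32(x: float) -> float:
    """Emulate TF32 precision by keeping only the top 10 mantissa bits.

    Assumption: plain truncation, chosen for simplicity. Real Tensor
    Core hardware may round differently.
    """
    # Reinterpret the value as a 32-bit IEEE 754 bit pattern.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # FP32 has 23 mantissa bits; TF32 keeps 10, so clear the low 13 bits.
    bits &= ~((1 << 13) - 1) & 0xFFFFFFFF
    # Convert the masked bit pattern back to a Python float.
    return struct.unpack("<f", struct.pack("<I", bits))[0]
```

Values that need at most 10 mantissa bits, such as `1.0` or `1 + 2**-10`, pass through unchanged, while finer detail such as `1 + 2**-11` is dropped.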

Massive GPU Memory for Unprecedented Workloads

One of the standout features of the A40 is its 48GB of ultra-fast GDDR6 memory. Scalable up to 96GB with NVLink, this expansive memory capacity caters to the needs of data scientists, engineers, and creative professionals dealing with massive datasets and resource-intensive workloads like data science and simulation. The A40 is not just a GPU; it’s a powerhouse ready to tackle the most demanding tasks.

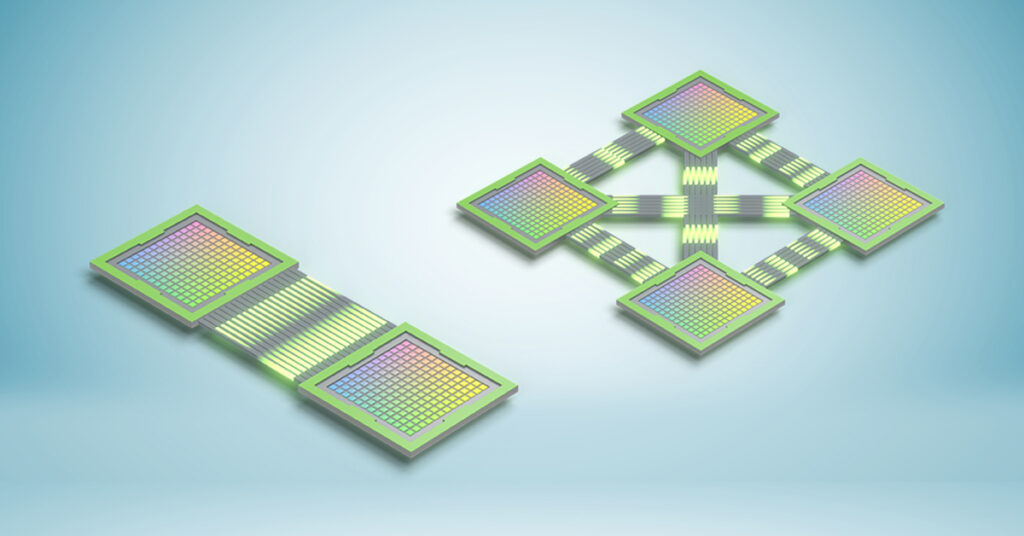

Third-Generation NVIDIA NVLink: Scaling Possibilities

Connectivity is key in the AI era, and the A40 addresses this with its third-generation NVIDIA NVLink. Connecting two A40 GPUs scales the available GPU memory from 48GB to 96GB. The higher GPU-to-GPU interconnect bandwidth lets the pair work as a single, larger memory pool, which helps accelerate graphics and compute workloads and handle larger datasets. The new, more compact NVLink connector fits a wider range of servers, giving more flexibility in deployment.
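As a rough illustration of what the jump from 48GB to 96GB means in practice, the sketch below estimates the memory needed for a model's raw weights and checks which configuration could hold them. The function names and the 20-billion-parameter example are hypothetical, and the estimate deliberately ignores activations, optimizer state, and framework overhead, which add substantially to real memory use.

```python
A40_MEMORY_GB = 48          # single A40, from the text
NVLINK_PAIR_MEMORY_GB = 96  # two A40s connected with NVLink, from the text

def weights_size_gb(num_parameters: int, bytes_per_parameter: int) -> float:
    """Memory for the raw weights only, in GB (1 GB = 10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9

def smallest_fit(size_gb: float) -> str:
    """Name the smallest A40 configuration whose memory covers size_gb."""
    if size_gb <= A40_MEMORY_GB:
        return "single A40"
    if size_gb <= NVLINK_PAIR_MEMORY_GB:
        return "NVLink pair"
    return "does not fit"

# Hypothetical model: 20 billion parameters.
fp32_gb = weights_size_gb(20_000_000_000, 4)  # 4 bytes per FP32 weight
fp16_gb = weights_size_gb(20_000_000_000, 2)  # 2 bytes per FP16 weight
print(fp32_gb, smallest_fit(fp32_gb))
print(fp16_gb, smallest_fit(fp16_gb))
```

Under these assumptions the FP32 weights need 80 GB and only fit on the NVLink pair, while the FP16 weights need 40 GB and fit on a single card.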

Virtualization-Ready: A Glimpse into the Future

Recognizing the evolving landscape of AI applications, the A40 is virtualization-ready, boasting next-generation improvements with NVIDIA virtual GPU (vGPU) software. This advancement allows for larger, more powerful virtual workstation instances for remote users, facilitating high-end remote design, AI, and compute workloads. NVIDIA’s commitment to virtualization demonstrates its foresight in adapting to the changing needs of AI professionals.

PCI Express Gen 4: Accelerating Data Transfer

The A40 is future-ready with PCI Express Gen 4, doubling the bandwidth of PCIe Gen 3. This improvement significantly enhances data-transfer speeds from CPU memory, a crucial aspect for data-intensive tasks like AI, data science, and 3D design. Faster PCIe performance not only accelerates GPU direct memory access (DMA) transfers but also ensures deployment flexibility by maintaining backward compatibility with PCI Express Gen 3.
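The doubling can be checked with a short calculation. This sketch assumes a x16 link and uses the per-lane transfer rates of 8 GT/s for Gen 3 and 16 GT/s for Gen 4, both with 128b/130b encoding. It gives theoretical one-direction figures before protocol overhead, so measured throughput will be lower. The function name is hypothetical.

```python
# Per-lane raw transfer rate in gigatransfers per second.
TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0}
# Both generations send 128 payload bits in every 130-bit block.
ENCODING_EFFICIENCY = 128 / 130

def pcie_bandwidth_gb_s(generation: int, lanes: int = 16) -> float:
    """Theoretical one-direction bandwidth in GB/s for a PCIe link."""
    gbit_per_s_per_lane = TRANSFER_RATE_GT_S[generation] * ENCODING_EFFICIENCY
    return gbit_per_s_per_lane * lanes / 8  # 8 bits per byte

gen3 = pcie_bandwidth_gb_s(3)
gen4 = pcie_bandwidth_gb_s(4)
print(f"Gen 3 x16: {gen3:.2f} GB/s per direction")
print(f"Gen 4 x16: {gen4:.2f} GB/s per direction")
print(f"Ratio: {gen4 / gen3:.1f}x")
```

Counting both directions, Gen 4 x16 comes to roughly 63 GB/s, in line with the rounded 64GB/s figure commonly quoted for the interface.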

Data Center Efficiency and Security: A Balance of Power and Trust

Efficiency and security are paramount in data center operations, and the A40 addresses both. Its dual-slot design is up to 2X as power-efficient as the previous generation, and it is validated with a wide range of NVIDIA-Certified systems from global OEMs. For security, the A40 offers measured boot with a hardware root of trust, which helps ensure that firmware has not been tampered with or corrupted.
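The general idea behind measured boot can be sketched as a hash chain: each stage is hashed and folded into a running measurement, so any change to any stage changes the final value. The code below is a generic illustration of that pattern, similar in spirit to how a TPM extends a register. It is not NVIDIA's firmware implementation, and the stage names are hypothetical.

```python
import hashlib
from typing import Iterable

def extend(register: bytes, component: bytes) -> bytes:
    """Fold one component's SHA-256 digest into the running measurement."""
    component_digest = hashlib.sha256(component).digest()
    return hashlib.sha256(register + component_digest).digest()

def measure_boot_chain(components: Iterable[bytes]) -> bytes:
    """Measure every stage in order, starting from an all-zero register."""
    register = bytes(32)
    for component in components:
        register = extend(register, component)
    return register

# Hypothetical firmware stages, used only for illustration.
GOOD_CHAIN = [b"bootloader v1", b"firmware v1", b"driver v1"]
EXPECTED = measure_boot_chain(GOOD_CHAIN)

tampered = [b"bootloader v1", b"firmware v1 (modified)", b"driver v1"]
print(measure_boot_chain(GOOD_CHAIN) == EXPECTED)  # unchanged chain matches
print(measure_boot_chain(tampered) == EXPECTED)    # modified stage does not
```

A verifier that knows the expected measurement can detect a modified stage without inspecting the firmware itself. The hardware root of trust is what makes the first measurement trustworthy.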

A Comprehensive Solution for Professionals: Specifications and Professional Features

Let’s delve into the specifications and professional features that make the NVIDIA A40 the go-to solution for professionals across various industries:

- GPU Memory: 48GB GDDR6 with error-correcting code (ECC)

- GPU Memory Bandwidth: 696 GB/s

- Interconnect: NVIDIA NVLink 112.5 GB/s (bidirectional), PCIe Gen4: 64GB/s

- NVLink: 2-way low profile (2-slot)

- Display Ports: 3x DisplayPort 1.4

- Max Power Consumption: 300 W

- Form Factor: 4.4″ (H) x 10.5″ (L) Dual Slot

- Thermal: Passive

- vGPU Software Support: NVIDIA Virtual PC, NVIDIA Virtual Applications, NVIDIA RTX Virtual Workstation, NVIDIA Virtual Compute Server, NVIDIA AI Enterprise

- vGPU Profiles Supported: Refer to the Virtual GPU Licensing Guide

- NVENC | NVDEC: 1x | 2x (includes AV1 decode)

- NEBS Ready: Level 3

- Power Connector: 8-pin CPU
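To put the bandwidth figures in the list above into perspective, the following sketch estimates the ideal time to move the card's full 48 GB over each path. It uses only the peak numbers quoted in the specifications, so the results are lower bounds. The NVLink figure is bidirectional, which means a one-way copy would take longer than shown. The names are hypothetical.

```python
A40_MEMORY_GB = 48

# Peak bandwidths in GB/s, taken from the specification list.
A40_BANDWIDTH_GB_S = {
    "GPU memory": 696.0,
    "NVLink (bidirectional)": 112.5,
    "PCIe Gen4": 64.0,
}

def seconds_to_move(size_gb: float, bandwidth_gb_s: float) -> float:
    """Ideal transfer time at peak bandwidth, ignoring all overhead."""
    return size_gb / bandwidth_gb_s

for path, bandwidth in A40_BANDWIDTH_GB_S.items():
    seconds = seconds_to_move(A40_MEMORY_GB, bandwidth)
    print(f"{path}: {seconds:.3f} s to move {A40_MEMORY_GB} GB")
```

The gap between on-board memory and the external links is the reason large datasets benefit from staying resident in GPU memory.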

Professional Features: Beyond Expectations

The A40 isn’t just a GPU; it’s a comprehensive solution catering to the needs of professionals in various fields:

Immersive VR Experiences

The A40 powers immersive augmented reality (AR) and virtual reality (VR) experiences on high-resolution head-mounted displays (HMDs), with the graphics and display bandwidth these devices require. Its four-way VR SLI capability assigns two NVLink-connected GPUs to each eye to maximize performance.

Quadro Sync: Synchronized Visualization

Synchronize multiple A40 GPUs with displays or projectors seamlessly using NVIDIA Quadro Sync technology. This capability allows the creation of large-scale visualizations, ideal for scenarios where precision and synchronization are paramount.

Video Encode and Decode: Unmatched Multimedia Capabilities

Dedicated video encoder (NVENC) and decoder (NVDEC) engines give the A40 the performance needed to work with multiple video streams simultaneously. This accelerates video export and multi-stream video applications, and serves use cases in broadcast, security, and video streaming.

Enterprise Drivers: Optimal Performance and Stability

Virtual workstations powered by NVIDIA RTX Virtual Workstation (RTX vWS) software, formerly Quadro Virtual Data Center Workstation (Quadro vDWS), are built for optimal performance and stability. Extensive testing across a broad range of industry applications, along with certifications from over 100 independent software vendors (ISVs), supports reliability for professionals across diverse domains.

The A40 in Action: Real-World Applications

The capabilities of the NVIDIA A40 extend far beyond impressive specifications. Let’s explore how this powerhouse contributes to various real-world applications, propelling industries into the future.

Rendering: Accelerating Final Frame Rendering

The A40 accelerates final frame rendering, whether through bare-metal or virtual workstation instances. Scenes that once took considerable time on traditional CPUs now render in a fraction of the time, highlighting the A40’s prowess in graphics-intensive tasks.

Computer-Aided Design: Compressing Design Cycles

For professionals working in computer-aided design (CAD), the A40 with RTX Virtual Workstations shortens design cycles and lowers unit costs. Performance is intended to be indistinguishable from that of physical workstations, so design iterations proceed without added delay.

3D Production: Fast-Tracking Visual Effects

In the world of 3D production for films and visual effects, time is of the essence. The A40, with its virtual workstations accessible from anywhere, empowers creative professionals to produce high-quality visual effects for blockbuster films faster and within budget. The flexibility of accessing virtual workstations opens up new possibilities for collaboration and efficiency.

AR/VR Development: Accelerating Time to Visualization

Augmented reality (AR) and virtual reality (VR) experiences demand cutting-edge graphics and increased display bandwidth. The A40 not only meets but exceeds these demands, accelerating the time to visualization at the edge. A full-stack solution allows for the running and scaling of XR applications to untethered devices, anywhere, across 5G networks.

Engineering Simulation: Iterating Faster, Computing Smarter

In engineering simulations, speed and iteration are crucial. The A40, when combined with RTX vWS, enables professionals to set up, test, and iterate on complex simulations at an accelerated pace. Design during the day and compute during the night, all with the power of NVIDIA GPUs.

Geospatial Analysis: Access to High-End Cartography

For professionals engaged in geospatial analysis, immediate access to high-end cartography is a necessity. The A40, with its accelerated virtual workstations, provides both the computational power and access required for intensive geospatial analysis. This opens up new horizons for professionals dealing with complex mapping and analytical tasks.

Remote Collaboration: Streamlining 3D Production

In the era of remote work, collaboration is key. The A40 streamlines 3D production by bringing RTX capabilities to third-party applications through the NVIDIA Omniverse digital collaboration platform. This not only enhances collaboration but also addresses security concerns by eliminating the need to distribute sensitive files globally.

Game Development: Creating Entertainment from Anywhere

For game developers, the A40 enables the creation of high-quality gaming entertainment from anywhere. Leveraging a scalable, secure, and centrally managed infrastructure, game development teams can unlock new possibilities and deliver immersive experiences to gamers around the world.

The NVIDIA EGX Platform: Redefining Professional Visualization

The NVIDIA A40, along with NVIDIA vGPU software, takes center stage in the next-generation NVIDIA EGX platform certified for professional visualization. This platform delivers the performance and features needed to power professional graphics and computing anywhere. As creative and technical professionals face increasingly complex challenges, the EGX platform provides a standardized architecture for running any workload, from rendering to simulation.

Addressing the Challenges of Tomorrow: AI Collaboration with Google Cloud

Recognizing the barriers many organizations face in adopting generative AI, NVIDIA collaborates with Google Cloud to propel the industry forward. The Google Cloud and NVIDIA partnership aims to accelerate generative AI and modern AI workloads in a cost-effective, scalable, and sustainable manner.

Generative AI Support in Vertex AI

In March 2023, Google Cloud introduced Generative AI support in Vertex AI, a significant step in giving developers the ability to access, tune, and deploy foundation models. The collaboration extends further, with Google Cloud being the first to offer the NVIDIA L4 Tensor Core GPU, purpose-built for large AI inference workloads like generative AI. The new G2 VM instances powered by NVIDIA L4 offer up to 4X more performance than previous-generation instances, improving performance per dollar.

NVIDIA AI Enterprise Software on Google Cloud Marketplace

The collaboration extends beyond hardware to software, with NVIDIA AI Enterprise software available on Google Cloud Marketplace. This suite of software accelerates the data science pipeline and streamlines the development and deployment of production AI. With support for a wide range of frameworks, pretrained models, and development tools, NVIDIA AI Enterprise aims to make AI simpler and more accessible to enterprises.

Access to Open Source Tools

The collaboration focuses on making a wide range of GPUs accessible across Vertex AI’s Workbench, Training, Serving, and Pipeline services. This support caters to various open-source models and frameworks, allowing organizations to accelerate Spark, Dask, and XGBoost pipelines or leverage PyTorch, TensorFlow, Keras, or Ray frameworks for larger deep learning workloads. This approach ensures a managed and scalable way to accelerate the machine learning development and deployment lifecycle.

GPU-Accelerated Spark for Dataproc Customers

Acknowledging the diversity of AI workloads, Google has partnered with NVIDIA to make GPU-accelerated Spark available to Dataproc customers. Utilizing the RAPIDS suite of open-source software libraries, customers can tailor their Spark clusters to AI and machine learning workloads, enhancing efficiency in data preparation and model training.
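As a rough sketch of what tailoring a Spark job for GPUs can look like, the snippet below assembles a `spark-submit` command line with the settings typically used to enable the RAPIDS Accelerator for Apache Spark. The configuration keys are those of the RAPIDS Accelerator and Spark's GPU resource scheduling. The values, the helper function, and the script name `etl_job.py` are hypothetical, and Dataproc-specific cluster setup (such as installing the plugin and drivers) is assumed to be done already.

```python
# Spark settings commonly used with the RAPIDS Accelerator plugin.
# The values are illustrative assumptions, not tuned recommendations.
RAPIDS_SPARK_CONF = {
    "spark.plugins": "com.nvidia.spark.SQLPlugin",  # load the accelerator
    "spark.rapids.sql.enabled": "true",             # run SQL ops on the GPU
    "spark.executor.resource.gpu.amount": "1",      # one GPU per executor
    "spark.task.resource.gpu.amount": "0.25",       # four tasks share a GPU
}

def spark_submit_args(conf: dict[str, str], script: str) -> list[str]:
    """Build a spark-submit argument list from a configuration mapping."""
    args = ["spark-submit"]
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    args.append(script)
    return args

# "etl_job.py" is a hypothetical job script.
print(" ".join(spark_submit_args(RAPIDS_SPARK_CONF, "etl_job.py")))
```

Keeping the settings in one mapping makes it easy to compare GPU and CPU runs of the same job by toggling `spark.rapids.sql.enabled`.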

Reducing Carbon Footprint of Intensive AI Workloads

In an era where environmental considerations are paramount, Google and NVIDIA are committed to reducing the carbon footprint of intensive AI workloads. Choosing the cleanest cloud infrastructure and partnering with NVIDIA to offer energy-efficient GPUs are steps in the right direction. The A40, coupled with strategic GPU choices and data center location considerations, can help cut the carbon emissions of AI/ML training by a factor of as much as 1,000.

The Next Frontier: NVIDIA GH200 Grace Hopper Superchip

As the AI landscape continues to evolve, NVIDIA is following up with its next-generation GH200 Grace Hopper Superchip platform. Designed to handle the world’s most complex generative AI workloads, the GH200 pairs a Grace CPU with a Hopper GPU, building on the success of the H100.

Enhanced Memory Capacity

The GH200 retains the powerful GPU of the H100 but introduces a significant enhancement with 141 GB of memory, compared to the H100’s 80 GB. This boost in memory capacity is a testament to NVIDIA’s commitment to meeting the escalating demands of AI developers and researchers.

Versatile Configurations for Varied Workloads

Recognizing the diverse needs of data centers, the GH200 offers various configurations, including a dual configuration that combines two GH200s. This dual setup provides 3.5x more memory capacity and 3x more bandwidth than the current generation, offering unprecedented versatility for handling large-scale AI workloads.
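The memory figure can be checked with simple arithmetic. The sketch below assumes that the "current generation" baseline is the 80 GB H100 mentioned earlier and that a dual configuration simply doubles the 141 GB per GH200. The bandwidth ratio is not reproduced because the text gives no bandwidth figures.

```python
H100_MEMORY_GB = 80    # baseline, from the text
GH200_MEMORY_GB = 141  # per GH200, from the text

def dual_gh200_memory_ratio() -> float:
    """Memory of two GH200s relative to a single 80 GB H100."""
    return 2 * GH200_MEMORY_GB / H100_MEMORY_GB

print(f"Dual GH200 memory: {2 * GH200_MEMORY_GB} GB")
print(f"Ratio to H100: {dual_gh200_memory_ratio():.3f}x")
```

Under these assumptions the dual setup offers 282 GB, about 3.5 times the baseline, which matches the quoted figure.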

The GH200’s Role in Data Centers

Designed for data centers operated by cloud service providers, the GH200 addresses the surging demand for generative AI. Its exceptional memory technology and bandwidth improvement contribute to enhanced throughput, ensuring that data centers can deploy the GH200 across various scenarios without compromising performance.

A Future Defined by NVIDIA’s Commitment to AI Excellence

In conclusion, the NVIDIA A40 stands as a testament to NVIDIA’s unwavering commitment to excellence in AI computing. From its powerful Ampere Architecture to the innovative features addressing real-world challenges, the A40 is not just a GPU; it’s a catalyst for the future of AI.

As the industry embarks on the next era of generative AI and complex workloads, the collaborative efforts with Google Cloud further solidify NVIDIA’s position as a leader in AI solutions. The introduction of the GH200 Grace Hopper Superchip platform extends the legacy, promising even greater capabilities to meet the evolving needs of AI developers and researchers.

In a world where AI is no longer a luxury but a strategic imperative, NVIDIA continues to pave the way for transformative change. The epoch-making chip powering the AI era is not just a piece of hardware; it’s a symbol of innovation, collaboration, and a commitment to shaping a future where AI transcends boundaries and unlocks new possibilities for us all.